Prompt engineering – How do I tell the AI? Enhancing communication with Artificial Intelligence

What once sounded like science fiction is now reality: machines that can learn, think, and even be creative.

But how do you communicate with AI? How do you get it to produce exactly the content you have in mind? An AI doesn't think like a human: it detects patterns in data. That's why it needs a different style of communication. This is where prompt engineering comes in.

What is prompt engineering?

Think of an AI model as an omniscient toddler. It has access to an incredible amount of knowledge – but it needs clear instructions to use that knowledge in a meaningful and contextual way.

Prompt engineering refers to exactly this technique: asking the AI the right questions to get the best possible answers. It also involves setting the right context, that is, showing the AI the situation its answer should fit into.

Challenges in prompt engineering

Prompt engineering is a very new technique. While it may seem easy to learn, it presents several challenges in the field of technical communication:

Quality of responses

One of the biggest challenges in working with AI models is the often unpredictable nature of the responses. They can be long-winded, unstructured, or off-topic – which is particularly problematic in technical contexts.

Another issue is hallucination, where the AI generates plausible-sounding but factually incorrect information that may not be immediately recognized as false.

Complexity of tasks

Tasks in technical contexts are usually complex and involve multiple steps. A single simple query rarely produces a satisfactory answer.

Quality of data

An AI can only learn from the data available to it. If that data is too sparse, vague, or ambiguous, the AI will produce inaccurate results.

Prompt engineering methods: Here's how it works

There are a few methods for engineering prompts to get better results from the AI:

Zero-shot method

The AI solves a task without having seen an example, relying on its general knowledge and contextual understanding. This method is useful when no examples are available, and its output often serves as a first draft for further refinement.

Example:

"Create a safety warning for a pressure washer."

- The AI generates an appropriate warning based on its general knowledge and typical safety-warning formats, without you having to provide an example. The result:

⚠ Safety Warning – Pressure Washer

Caution! Improper use can result in serious injury or property damage.

One-shot method

The AI sees exactly one example before solving a task. Use this method when it's difficult to provide multiple examples, when time is short, or when the zero-shot method wasn't effective. A single example can already improve the result.

Example:

Provide the following example: "Warning: Wear protective gloves to avoid injury from hot surfaces."

Then you ask: "Create a similar warning for working with power lines."

- The AI applies the pattern to the new context. The result is as follows:

⚠ Warning: Risk of electric shock!

Work on power lines only with proper protective gear and after power is disconnected.

Few-shot method

You provide a few examples (typically 2-10) before the AI performs the task. These examples help the AI better understand the context.

Example:

You give the AI three instructions for using a product, for example taken from its manual:

- "To turn on the device, press and hold the power button for 3 seconds."

- "To replace the battery, open the back of the device."

- "To connect to Wi-Fi, open the Network Settings menu."

Your prompt: "Write an instruction to reset to factory settings."

- The AI recognizes the format and provides a consistent description:

"To reset to factory settings, open the System menu and select Reset to Factory Settings."
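If you build prompts in code, the same pattern can be assembled programmatically. The Python sketch below is a minimal illustration, not a reference implementation: `build_few_shot_prompt` is a hypothetical helper that lists the examples before the task. Called with an empty list it yields a zero-shot prompt, and with a single example a one-shot prompt.

```python
def build_few_shot_prompt(examples: list[str], task: str) -> str:
    """Assemble a prompt that shows example outputs before the actual task.

    Hypothetical helper: with no examples this is a zero-shot prompt,
    with one example a one-shot prompt, with several a few-shot prompt.
    """
    lines = []
    if examples:
        lines.append("Follow the style of these examples:")
        # Number each example so it is clear where one ends and the next begins.
        for i, example in enumerate(examples, start=1):
            lines.append(f"Example {i}: {example}")
        lines.append("")  # blank line separates the examples from the task
    lines.append(task)
    return "\n".join(lines)


examples = [
    "To turn on the device, press and hold the power button for 3 seconds.",
    "To replace the battery, open the back of the device.",
    "To connect to Wi-Fi, open the Network Settings menu.",
]
prompt = build_few_shot_prompt(examples, "Write an instruction to reset to factory settings.")
print(prompt)
```

Numbering the examples is only one design choice; any clear separator between the examples and the task should serve a similar purpose.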

Prompt chaining

Prompt chaining is an iterative technique for refining and developing prompts, where the AI’s responses are improved step by step. This method is especially useful when a complex task can't be solved with a single prompt.

Instead, the task is broken down into smaller, more manageable subtasks. Each subtask gets its own intermediate goal, and each of these goals acts as a lever you can adjust to improve the quality of the final result.

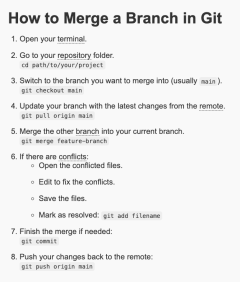

A typical use case: You want to create instructions on how to merge a branch in Git. Instead of trying to cover everything in a single prompt, you break the process down – for example, into preparing the repository, identifying the target branch, and finally, carrying out the merge itself. With each iteration, your prompts become more targeted, leading to clearer and more useful responses.

You’ll find the full chat process after step 4.

1) Ask the question

First prompt: "Write a how-to on merging a branch in Git."

→ Clarifies the intent.

2) Add context

Second prompt: "Write a numbered beginner’s guide. Style: minimalist. Define technical terms."

→ Adds clarity and audience targeting.

3) Define output format

Third prompt: "Output as HTML page with basic CSS. Use tooltips for definitions."

→ Provides formatting instructions.

4) Provide example

In the last step, you can give a concrete example, such as what the HTML output should look like or how terms should be explained. In our case, we asked ChatGPT to create a responsive page and add small icons to the steps.

→ Final refinement.
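If you work with a model through code rather than a chat window, the four steps above can be sketched as a loop that keeps the whole conversation, so each prompt refines the previous answer. This is a minimal sketch under assumptions: the message format (a list of dicts with `role` and `content`) mirrors what many chat APIs use, `fake_model` is a hypothetical placeholder for a real model call, and the fourth prompt only paraphrases the refinement described in step 4.

```python
from typing import Callable

# Assumed message shape: {"role": ..., "content": ...}. Adapt it to your client.
Message = dict[str, str]
Model = Callable[[list[Message]], str]


def run_chain(model: Model, prompts: list[str]) -> list[Message]:
    """Send prompts one after another, keeping every earlier turn as context."""
    history: list[Message] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        # The model sees the whole conversation so far, so each prompt
        # builds on the previous answer instead of starting from scratch.
        reply = model(history)
        history.append({"role": "assistant", "content": reply})
    return history


def fake_model(history: list[Message]) -> str:
    """Hypothetical stand-in for a real model: reports how many prompts it has seen."""
    user_turns = sum(1 for m in history if m["role"] == "user")
    return f"Draft {user_turns}"


chain = [
    "Write a how-to on merging a branch in Git.",
    "Write a numbered beginner's guide. Style: minimalist. Define technical terms.",
    "Output as HTML page with basic CSS. Use tooltips for definitions.",
    "Make the page responsive and add small icons to the steps.",
]
for message in run_chain(fake_model, chain):
    print(f"{message['role']}: {message['content']}")
```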

These steps have no fixed order, but different orders can yield significantly different results.

Another key lesson in prompt engineering: there are no hard and fast rules. Trial and error, experimentation, and constant adjustment are all essential parts of the process. What works well in one situation might fail in another.

Best practices for prompt engineering

In summary, we can derive the following best practices for prompt engineering:

- Use iteration: The first answer is rarely the best. Refine step by step.

- Be concise and clear: Precise prompts minimize misinterpretation.

- Provide context with roles: Tell the AI its role, e.g., “You are a technical writer.”

- Verify output: AI provides likely answers, not guaranteed facts. Always check the output.

- Adapt continuously: There's no “right” way. Testing and iteration are essential.
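When you address a model through an API rather than a chat window, the role tip above can be expressed as a separate message. Many chat-style APIs accept a "system" message for this purpose; the sketch below assumes that message format, and `with_role` is a hypothetical helper, not part of any specific library.

```python
def with_role(role_description: str, request: str) -> list[dict[str, str]]:
    """Hypothetical helper: put the role in a system message ahead of the request."""
    return [
        # The role sets the perspective for every answer in the conversation.
        {"role": "system", "content": role_description},
        # The actual request stays short and specific.
        {"role": "user", "content": request},
    ]


messages = with_role(
    "You are a technical writer.",
    "Write an instruction to reset the device to factory settings.",
)
print(messages)
```

Keeping the role separate from the request means you can reuse the same role across many requests without repeating it in every prompt.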

When is prompt engineering worthwhile?

Whether prompt engineering is worthwhile depends on the specific application. In general, the following scenarios are particularly well-suited:

- Recurring tasks: Store and reuse well-written prompts to save time and ensure consistency.

- Specific use cases: Customized prompts deliver reliable, fast results when the context is well defined.

- Lack of technical expertise: Even non-experts can use well-tested prompts to effectively address complex issues.
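For recurring tasks, a stored prompt can be kept as a template with placeholders, so the wording stays identical from one request to the next. The sketch below uses Python's standard `string.Template`; the template text and its `$product` and `$example` placeholders are illustrative assumptions built from the warning examples earlier in this article.

```python
from string import Template

# Hypothetical reusable prompt. Only the $placeholders change per request.
WARNING_PROMPT = Template(
    "You are a technical writer. Create a safety warning for a $product. "
    "Follow this example: \"$example\""
)

prompt = WARNING_PROMPT.substitute(
    product="pressure washer",
    example="Warning: Wear protective gloves to avoid injury from hot surfaces.",
)
print(prompt)
```

`substitute` raises a `KeyError` if a placeholder is left unfilled, which helps catch incomplete prompts before they reach the model.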

Conclusion

Prompt engineering is a key method for improving interactions with AI systems. By carefully crafting prompts and refining them iteratively, we get AI responses that are more accurate and useful. But it's important to understand the limitations of this technique and to always assess critically whether the AI is delivering what you need.